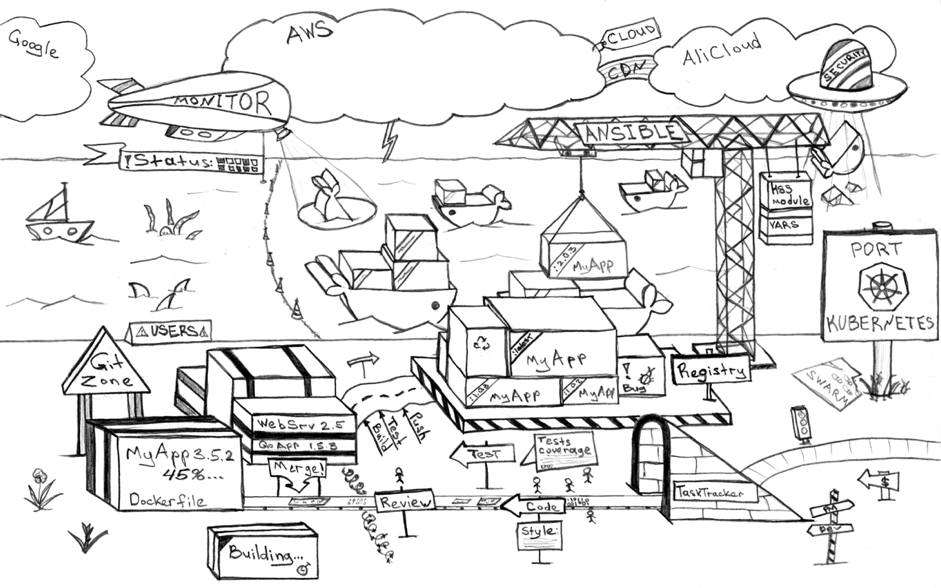

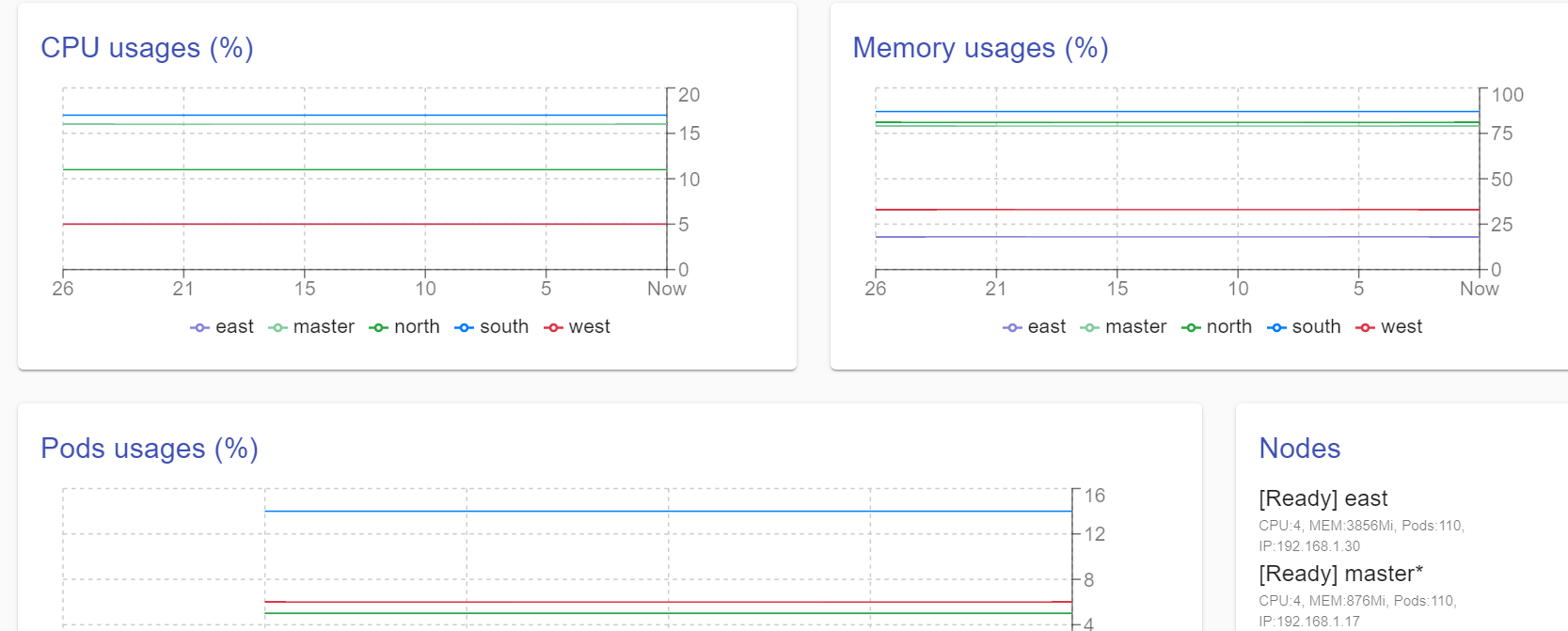

Now that we have a working K8S cluster, we can start developing, building and deploying applications.

Let's check the cluster health with "kubectl get nodes":

NAME STATUS ROLES AGE VERSION

east Ready <none> 3d23h v1.17.4

master Ready master 66d v1.17.1

north Ready <none> 66d v1.17.1

south Ready <none> 66d v1.17.1

west Ready <none> 3d23h v1.17.4

So, to build an operational CI/CD pipeline, we need a good orchestrator such as Jenkins. Let's deploy it.
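The node listing above can also be checked from a script. As a minimal sketch (the function name all_nodes_ready is hypothetical and not part of the original post), the following reads the default "kubectl get nodes" output and fails when any node reports a status other than "Ready":

```shell
#!/bin/bash
# Sketch: succeed only if every node row read from stdin has STATUS "Ready".
# Expects the default "kubectl get nodes" layout (header row, STATUS in column 2).
# Any other status, including cordoned nodes, counts as not ready.
all_nodes_ready() {
  awk 'NR > 1 && NF > 0 { seen = 1; if ($2 != "Ready") bad = 1 }
       END { exit (bad || !seen) ? 1 : 0 }'
}

# Typical use against a live cluster:
#   kubectl get nodes | all_nodes_ready && echo "cluster healthy"
```

An empty listing is treated as a failure too, so a broken API connection does not pass as a healthy cluster.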

Orchestrator

We will create a namespace named "jenkins" with a small PVC for the master:

apiVersion: v1

kind: Namespace

metadata:

name: jenkins

---

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

name: jenkins-master-pvc

namespace: jenkins

spec:

accessModes:

- ReadWriteMany

resources:

requests:

storage: 2Gi

Next, we will create the master deployment:

apiVersion: apps/v1

kind: Deployment

metadata:

name: jenkins-master

namespace: jenkins

labels:

app: jenkins-master

spec:

replicas: 1

selector:

matchLabels:

app: jenkins-master

template:

metadata:

labels:

app: jenkins-master

spec:

containers:

- name: jenkins-master

image: medinvention/jenkins-master:arm

imagePullPolicy: IfNotPresent

ports:

- containerPort: 8080

- containerPort: 50000

volumeMounts:

- mountPath: /var/jenkins_home

name: jenkins-home

volumes:

- name: jenkins-home

persistentVolumeClaim:

claimName: jenkins-master-pvc

The master container exposes 2 ports: 8080 for Web access and 50000 for JNLP communication, used by the slave (Jenkins executor).

Let's create 2 services to expose these ports:

apiVersion: v1

kind: Service

metadata:

name: jenkins-master-service

namespace: jenkins

spec:

ports:

- name: http

port: 80

targetPort: 8080

selector:

app: jenkins-master

---

apiVersion: v1

kind: Service

metadata:

name: jenkins-slave-service

namespace: jenkins

spec:

ports:

- name: jnlp

protocol: TCP

port: 50000

targetPort: 50000

selector:

app: jenkins-master

And finally, the TLS secret and the Ingress resource to access the Jenkins GUI from outside the cluster:

apiVersion: v1

kind: Secret

metadata:

name: jenkins-tls

namespace: jenkins

data:

tls.crt: {{crt}}

tls.key: {{key}}

type: kubernetes.io/tls

---

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

annotations:

kubernetes.io/ingress.class: nginx

name: jenkins-master

namespace: jenkins

labels:

app: jenkins-master

spec:

rules:

- host: {{host}}

http:

paths:

- backend:

serviceName: jenkins-master-service

servicePort: http

path: /

tls:

- hosts:

- {{host}}

secretName: jenkins-tls

You must replace {{host}} with your domain, and {{crt}} and {{key}} with your SSL certificate and private key, both base64-encoded.
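The values under "data" in a Secret must be base64-encoded, and keeping each value on a single line avoids breaking the YAML when it is substituted into a placeholder. A minimal sketch of producing them, assuming the certificate and key are stored in files named tls.crt and tls.key (hypothetical file names; encode_for_secret is a hypothetical helper too):

```shell
#!/bin/bash
# Sketch: base64-encode a file on one line, suitable for a Secret "data" field.
# Line wraps are stripped with tr so the result is the same with GNU and BSD base64.
encode_for_secret() {
  base64 < "$1" | tr -d '\n'
}

# Example use (tls.crt and tls.key are assumed file names):
#   CRT=$(encode_for_secret tls.crt)
#   KEY=$(encode_for_secret tls.key)
```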

Nice, we now have a Jenkins master deployed:

jenkins jenkins-master-cww 1/1 Running 4d4h 10.244.2.150 north

Executor

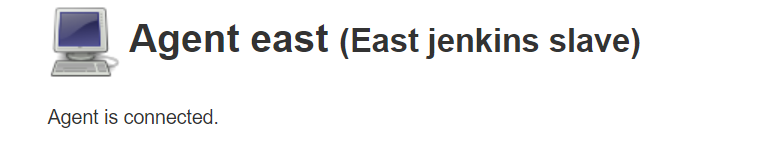

To build our application, we will use a Jenkins slave node to avoid overloading the master.

Before deploying it, you must configure a new node in the "administration section" to obtain the secret token needed by the slave.

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

name: jenkins-slave-pvc

namespace: jenkins

spec:

accessModes:

- ReadWriteMany

resources:

requests:

storage: 2Gi

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: jenkins-slave

namespace: jenkins

labels:

app: jenkins-slave

spec:

replicas: 1

selector:

matchLabels:

app: jenkins-slave

template:

metadata:

labels:

app: jenkins-slave

spec:

containers:

- name: jenkins-slave

image: medinvention/jenkins-slave:arm

imagePullPolicy: IfNotPresent

resources:

requests:

memory: "256Mi"

cpu: "250m"

limits:

memory: "512Mi"

cpu: "250m"

env:

- name: "JENKINS_SECRET"

value: "{{jenkins-secret}}"

- name: "JENKINS_AGENT_NAME"

value: "exec-1"

- name: "JENKINS_DIRECT_CONNECTION"

value: "jenkins-slave-service.jenkins.svc.cluster.local:50000"

- name: "JENKINS_INSTANCE_IDENTITY"

value: "{{jenkins-id}}"

volumeMounts:

- mountPath: /var/jenkins

name: jenkins-home

volumes:

- name: jenkins-home

persistentVolumeClaim:

claimName: jenkins-slave-pvc

nodeSelector:

name: east

Replace {{jenkins-secret}} with the token available after creating the node configuration on the master, and replace {{jenkins-id}} with the instance identity token that is sent in an HTTP response header (use a simple curl to extract it, @see).
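Jenkins publishes its instance identity in the X-Instance-Identity response header. A minimal sketch of pulling it out of a header dump; the helper name extract_identity is hypothetical, {{host}} stands for your Jenkins hostname, and the /login path is only an assumption about a page that is reachable without authentication:

```shell
#!/bin/bash
# Sketch: read HTTP response headers on stdin and print the value of
# X-Instance-Identity (the header name is matched case-insensitively).
extract_identity() {
  tr -d '\r' | awk -F': *' 'tolower($1) == "x-instance-identity" { print $2; exit }'
}

# Example use:
#   curl -sI "https://{{host}}/login" | extract_identity
```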

In our case, we add a "nodeSelector" to force the pod onto a specific node with more capacity.

After a short while, the new node will appear as connected and available.

The application

For this post, I will use a Maven project composed of 3 modules: a Java Core module, a Spring Boot Backend module and an Angular Front module, hosted on GitHub. It's a simple monitoring application for your K8S cluster.

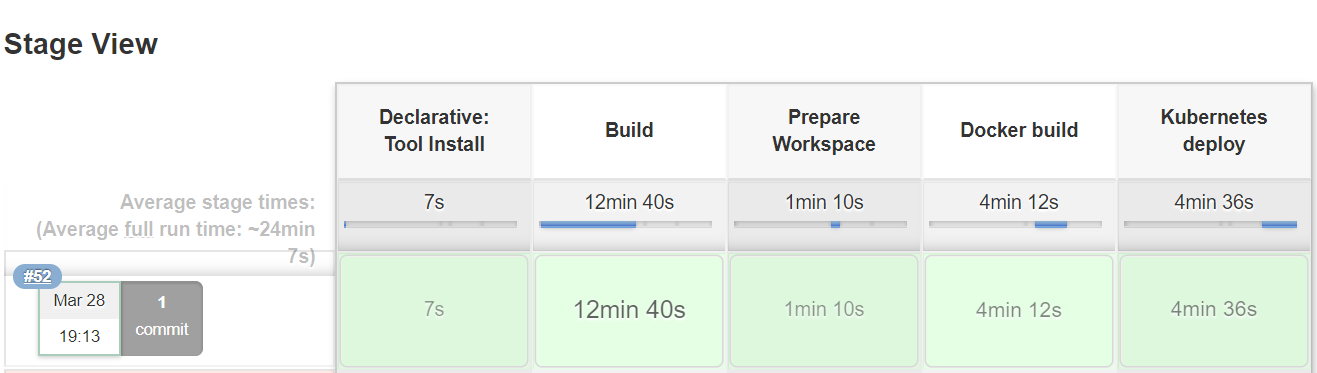

CI Pipeline

For our pipeline, we will use a declarative Jenkins pipeline file. We start with the Build stage: check out the source and build the artifacts (Java with Maven and JS with Node).

pipeline {

agent {

label 'slave'

}

tools {

maven "AutoMaven"

nodejs "AutoNode"

}

stages {

stage('Build') {

steps {

//checkout

checkout([$class: 'GitSCM',

branches: [[name: '*/dev']],

doGenerateSubmoduleConfigurations: false,

extensions: [[$class: 'SubmoduleOption',

disableSubmodules: false,

parentCredentials: false,

recursiveSubmodules: true,

reference: '',

trackingSubmodules: false]],

submoduleCfg: [],

userRemoteConfigs: [[url: 'https://github.com/mmohamed/k8s-monitoring.git']]])

// Package

sh 'mkdir -p $NODEJS_HOME/node'

sh 'cp -n $NODEJS_HOME/bin/node $NODEJS_HOME/node'

sh 'cp -rn $NODEJS_HOME/lib/node_modules $NODEJS_HOME/node'

sh 'ln -sfn $NODEJS_HOME/lib/node_modules/npm/bin/npm-cli.js $NODEJS_HOME/node/npm'

sh 'ln -sfn $NODEJS_HOME/lib/node_modules/npm/bin/npx-cli.js $NODEJS_HOME/node/npx'

sh 'export NODE_OPTIONS="--max_old_space_size=256" && export REACT_APP_URL_BASE="https://{{apihostname}}/k8s" && export PATH=$PATH:$NODEJS_HOME/bin && export NODE_PATH=$NODEJS_HOME && mvn install'

// Copy artifact to Docker build workspace

sh 'mkdir -p ./service/target/dependency && (cd service/target/dependency; jar -xf ../*.jar) && cd ../..'

sh 'mkdir -p ./service/target/_site && cp -r ./webapp/target/classes/static/* service/target/_site'

}

}

....

}

}

I have already configured the Node and Maven tools in the "Administration section".

In this stage, we start by preparing the tools and some environment variables for the Node binaries. We must then replace {{apihostname}} with our API application hostname, which is needed to build the Front component, and call the Maven "install" goal.

After building the artifacts, we extract the jar content (this makes the container start faster) and copy the ReactJs output files to the target directory, so that they can be copied to the Docker build node.
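The API Dockerfile written in the next stage copies BOOT-INF/lib, BOOT-INF/classes and META-INF from the extracted jar. A small sketch of a sanity check that could run right after the extraction; has_exploded_layout is a hypothetical helper and not part of the original pipeline:

```shell
#!/bin/bash
# Sketch: verify that a directory holds an extracted Spring Boot jar,
# i.e. the three directories the API Dockerfile copies from.
has_exploded_layout() {
  local dir=$1
  [ -d "$dir/BOOT-INF/lib" ] && [ -d "$dir/BOOT-INF/classes" ] && [ -d "$dir/META-INF" ]
}

# Example use in the Build stage:
#   has_exploded_layout service/target/dependency || exit 1
```

Failing early here is cheaper than discovering a missing directory during the remote Docker build.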

The next stage prepares the Docker workspace used to build the images. First, we copy the target directory content to the Docker workspace (we have defined a node credential named "SSHMaster" in the Jenkins master). Then we create and copy 2 Dockerfiles: the first uses OpenJDK for the backend Java module and the second uses a simple Nginx for the front Web module.

....

stage('Prepare Workspace'){

steps{

// Prepare Docker workspace

withCredentials([sshUserPrivateKey(credentialsId: "SSHMaster", keyFileVariable: 'keyfile')]) {

sh "ssh -i ${keyfile} [USER]@[NODEIP] 'mkdir -p ~/s2i-k8S/k8s-monitoring-$BUILD_NUMBER'"

sh "scp -i ${keyfile} -r service/target [USER]@[NODEIP]:~/s2i-k8S/k8s-monitoring-$BUILD_NUMBER"

}

// Create Dockerfile for api

writeFile file: "./Dockerfile.api", text: '''

FROM arm32v7/openjdk:8-jdk

ARG user=spring

ARG group=spring

ARG uid=1000

ARG gid=1000

RUN groupadd -g ${gid} ${group} && useradd -u ${uid} -g ${gid} -m -s /bin/bash ${user}

ARG DEPENDENCY=target/dependency

COPY --chown=spring:spring ${DEPENDENCY}/BOOT-INF/lib /var/app/lib

COPY --chown=spring:spring ${DEPENDENCY}/META-INF /var/app/META-INF

COPY --chown=spring:spring ${DEPENDENCY}/BOOT-INF/classes /var/app

USER ${user}

ENTRYPOINT ["java","-cp","var/app:var/app/lib/*","dev.medinvention.service.Application"]'''

// Create Dockerfile for front

writeFile file: "./Dockerfile.front", text: '''

FROM nginx

EXPOSE 80

COPY nginx.conf /etc/nginx/conf.d/default.conf

COPY target/_site/ /usr/share/nginx/html'''

// Create config for front

writeFile file: "./nginx.conf", text: '''

server {

listen 80;

server_name localhost;

location / {

root /usr/share/nginx/html;

try_files $uri /index.html;

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root /usr/share/nginx/html;

}

}'''

// copy docker and config file

withCredentials([sshUserPrivateKey(credentialsId: "SSHMaster", keyFileVariable: 'keyfile')]) {

sh "scp -i ${keyfile} Dockerfile.api [USER]@[NODEIP]:~/s2i-k8S/k8s-monitoring-$BUILD_NUMBER"

sh "scp -i ${keyfile} Dockerfile.front [USER]@[NODEIP]:~/s2i-k8S/k8s-monitoring-$BUILD_NUMBER"

sh "scp -i ${keyfile} nginx.conf [USER]@[NODEIP]:~/s2i-k8S/k8s-monitoring-$BUILD_NUMBER"

}

}

}

....

Now, we can start building our images with the Docker build stage.

....

stage('Docker build'){

steps{

withCredentials([sshUserPrivateKey(credentialsId: "SSHMaster", keyFileVariable: 'keyfile')]) {

sh "ssh -i ${keyfile} [USER]@[NODEIP] 'docker build ~/s2i-k8S/k8s-monitoring-$BUILD_NUMBER -f ~/s2i-k8S/k8s-monitoring-$BUILD_NUMBER/Dockerfile.api -t medinvention/k8s-monitoring-api:arm'"

sh "ssh -i ${keyfile} [USER]@[NODEIP] 'docker push medinvention/k8s-monitoring-api:arm'"

sh "ssh -i ${keyfile} [USER]@[NODEIP] 'docker rmi medinvention/k8s-monitoring-api:arm'"

sh "ssh -i ${keyfile} [USER]@[NODEIP] 'docker build ~/s2i-k8S/k8s-monitoring-$BUILD_NUMBER -f ~/s2i-k8S/k8s-monitoring-$BUILD_NUMBER/Dockerfile.front -t medinvention/k8s-monitoring-front:arm'"

sh "ssh -i ${keyfile} [USER]@[NODEIP] 'docker push medinvention/k8s-monitoring-front:arm'"

sh "ssh -i ${keyfile} [USER]@[NODEIP] 'docker rmi medinvention/k8s-monitoring-front:arm'"

}

}

}

....

Don't forget to replace [USER] and [NODEIP] with the user and IP address of your Docker build node (it can be any of the available cluster nodes).

And finally, the Kubernetes deployment stage:

....

stage('Kubernetes deploy'){

steps{

// deploy

withCredentials([string(credentialsId: 'KubeToken', variable: 'TOKEN'),

string(credentialsId: 'TLSKey', variable: 'KEY'),

string(credentialsId: 'TLSCrt', variable: 'CRT')

]) {

sh "export TOKEN=$TOKEN && export CRT=$CRT && export KEY=$KEY"

sh "cd k8s && sh deploy.sh"

}

}

}

....

For deployment, we can use the Helm package manager to make our deployment more production-grade, or we can write our own deployment script, as in this case:

#!/bin/bash

if [ -z "$CRT" ] || [ -z "$KEY" ]; then

echo "TLS CRT/KEY environment value not found !"

exit 1

fi

if [ -z "$TOKEN" ]; then

echo "Kube Token environment value not found !"

exit 1

fi

echo "Get Kubectl"

curl -s -LO https://storage.googleapis.com/kubernetes-release/release/v1.17.0/bin/linux/arm/kubectl

chmod +x ./kubectl

commitID=$(git log -1 --pretty="%H")

if [ $? != 0 ] || [ -z "$commitID" ]; then

echo "Unable to determine CommitID !"

exit 1

fi

echo "Deploy for CommitID : ${commitID}"

# create new deploy

sed -i "s|{{crt}}|`echo $CRT`|g" api.yaml

sed -i "s|{{key}}|`echo $KEY`|g" api.yaml

sed -i "s|{{host}}|[BACKENDHOSTNAME]|g" api.yaml

sed -i "s|{{commit}}|`echo $commitID`|g" api.yaml

./kubectl --token=$TOKEN apply -f api.yaml

if [ $? != 0 ]; then

echo "Unable to deploy API !"

exit 1

fi

# wait for ready

attempts=0

rolloutStatusCmd="./kubectl --token=$TOKEN rollout status deployment/api -n monitoring"

until $rolloutStatusCmd || [ $attempts -eq 60 ]; do

$rolloutStatusCmd

attempts=$((attempts + 1))

sleep 10

done

# create new deploy

sed -i "s|{{crt}}|`echo $CRT`|g" front.yaml

sed -i "s|{{key}}|`echo $KEY`|g" front.yaml

sed -i "s|{{host}}|[FRONTHOSTNAME]|g" front.yaml

sed -i "s|{{commit}}|`echo $commitID`|g" front.yaml

./kubectl --token=$TOKEN apply -f front.yaml

if [ $? != 0 ]; then

echo "Unable to deploy Front !"

exit 1

fi

# wait for ready

attempts=0

rolloutStatusCmd="./kubectl --token=$TOKEN rollout status deployment/front -n monitoring"

until $rolloutStatusCmd || [ $attempts -eq 60 ]; do

$rolloutStatusCmd

attempts=$((attempts + 1))

sleep 10

done

In this script, we check the TLS values and the "kube" access token, and install a local "kubectl" client. Next, we deploy the backend first; if that fails, we stop the deployment process. If the backend deployment succeeds, we proceed with the front deployment.
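The wait loops in the script retry the rollout check up to 60 times, but they run the command twice per iteration and do not fail when the attempts run out. A possible variant, as a sketch only (retry_until is a hypothetical helper name), runs the command once per attempt and returns a non-zero status on exhaustion:

```shell
#!/bin/bash
# Sketch: run a command until it succeeds, at most max_attempts times,
# sleeping delay seconds between attempts. Returns 1 if it never succeeds.
retry_until() {
  local max_attempts=$1 delay=$2
  shift 2
  local attempt=1
  until "$@"; do
    if [ "$attempt" -ge "$max_attempts" ]; then
      return 1
    fi
    attempt=$((attempt + 1))
    sleep "$delay"
  done
  return 0
}

# Example use with the values from the script:
#   retry_until 60 10 ./kubectl --token="$TOKEN" rollout status deployment/api -n monitoring \
#     || { echo "API rollout did not complete !"; exit 1; }
```

Passing the command as arguments rather than as a string also avoids word-splitting surprises if the token or a path ever contains spaces.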

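To give Jenkins access to the cluster, we need to generate an access token using a ClusterRoleBinding resource. The binding refers to a ServiceAccount named "jenkins-access" in the "jenkins" namespace, so that account must exist.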

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: jenkins-access

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: cluster-admin

subjects:

- kind: ServiceAccount

name: jenkins-access

namespace: jenkins

Run the following command to get the token, then create a secret credential in Jenkins with the token content:

kubectl -n jenkins describe secret $(kubectl -n jenkins get secret | grep jenkins-access | awk '{print $1}')

And finally, the backend deployment:

---

apiVersion: v1

kind: Namespace

metadata:

name: monitoring

---

apiVersion: v1

kind: Secret

metadata:

name: monitoring-tls

namespace: monitoring

data:

tls.crt: {{crt}}

tls.key: {{key}}

type: kubernetes.io/tls

---

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

annotations:

kubernetes.io/ingress.class: nginx

name: monitoring-ingress

namespace: monitoring

labels:

app: api

spec:

rules:

- host: {{host}}

http:

paths:

- backend:

serviceName: monitoring-service

servicePort: http

path: /

tls:

- hosts:

- {{host}}

secretName: monitoring-tls

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: api

namespace: monitoring

labels:

app: api

spec:

replicas: 1

selector:

matchLabels:

app: api

template:

metadata:

labels:

app: api

commit: '{{commit}}'

spec:

serviceAccountName: api-access

containers:

- name: api

image: medinvention/k8s-monitoring-api:arm

imagePullPolicy: Always

ports:

- containerPort: 8080

readinessProbe:

httpGet:

path: /health

port: 8080

initialDelaySeconds: 60

timeoutSeconds: 2

periodSeconds: 3

failureThreshold: 1

livenessProbe:

httpGet:

path: /health

port: 8080

initialDelaySeconds: 300

timeoutSeconds: 5

periodSeconds: 60

failureThreshold: 1

---

apiVersion: v1

kind: Service

metadata:

name: monitoring-service

namespace: monitoring

spec:

ports:

- name: http

port: 80

targetPort: 8080

selector:

app: api

---

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

name: api-access

namespace: monitoring

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: api-access

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: cluster-admin

subjects:

- kind: ServiceAccount

name: api-access

namespace: monitoring

And the front:

apiVersion: v1

kind: Secret

metadata:

name: front-tls

namespace: monitoring

data:

tls.crt: {{crt}}

tls.key: {{key}}

type: kubernetes.io/tls

---

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

annotations:

kubernetes.io/ingress.class: nginx

name: front

namespace: monitoring

labels:

app: front

spec:

rules:

- host: {{host}}

http:

paths:

- backend:

serviceName: front-service

servicePort: http

path: /

tls:

- hosts:

- {{host}}

secretName: front-tls

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: front

namespace: monitoring

labels:

app: front

spec:

replicas: 1

selector:

matchLabels:

app: front

template:

metadata:

labels:

app: front

commit: '{{commit}}'

spec:

containers:

- name: front

image: medinvention/k8s-monitoring-front:arm

imagePullPolicy: Always

ports:

- containerPort: 80

---

apiVersion: v1

kind: Service

metadata:

name: front-service

namespace: monitoring

spec:

ports:

- name: http

port: 80

targetPort: 80

selector:

app: front

Important: if you use the same image tag for every build, you must specify "imagePullPolicy: Always" to force Kubernetes to pull the image on every deployment.
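In the manifests above, the pod template also carries a "commit" label filled from the {{commit}} placeholder. Because that label changes with each commit, the pod template changes and Kubernetes starts a new rollout even though the image tag stays the same. A minimal sketch of that substitution, assuming a hypothetical helper render_manifest that reads a template on stdin (api.example.org is a made-up hostname):

```shell
#!/bin/bash
# Sketch: replace {{commit}} and {{host}} placeholders in a manifest read from stdin.
# "|" is used as the sed delimiter, as in deploy.sh; the values must not contain
# "|", "&" or backslashes (a Git commit hash and a hostname do not).
render_manifest() {
  local commit=$1 host=$2
  sed -e "s|{{commit}}|${commit}|g" -e "s|{{host}}|${host}|g"
}

# Example use (api.example.org is an assumed hostname):
#   render_manifest "$(git log -1 --pretty=%H)" api.example.org < api.yaml | ./kubectl apply -f -
```

Writing the rendered manifest to stdout instead of editing the file with "sed -i" leaves the template untouched between builds.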

Result

The S2I Job

It's done: you have an operational CI/CD pipeline for your development environment. When you push new code, your pipeline will be executed automatically to test, build, and deploy your application.

Complete source code available @here.